Data Virtualization vs ETL: Which Approach is Right for Your Business?

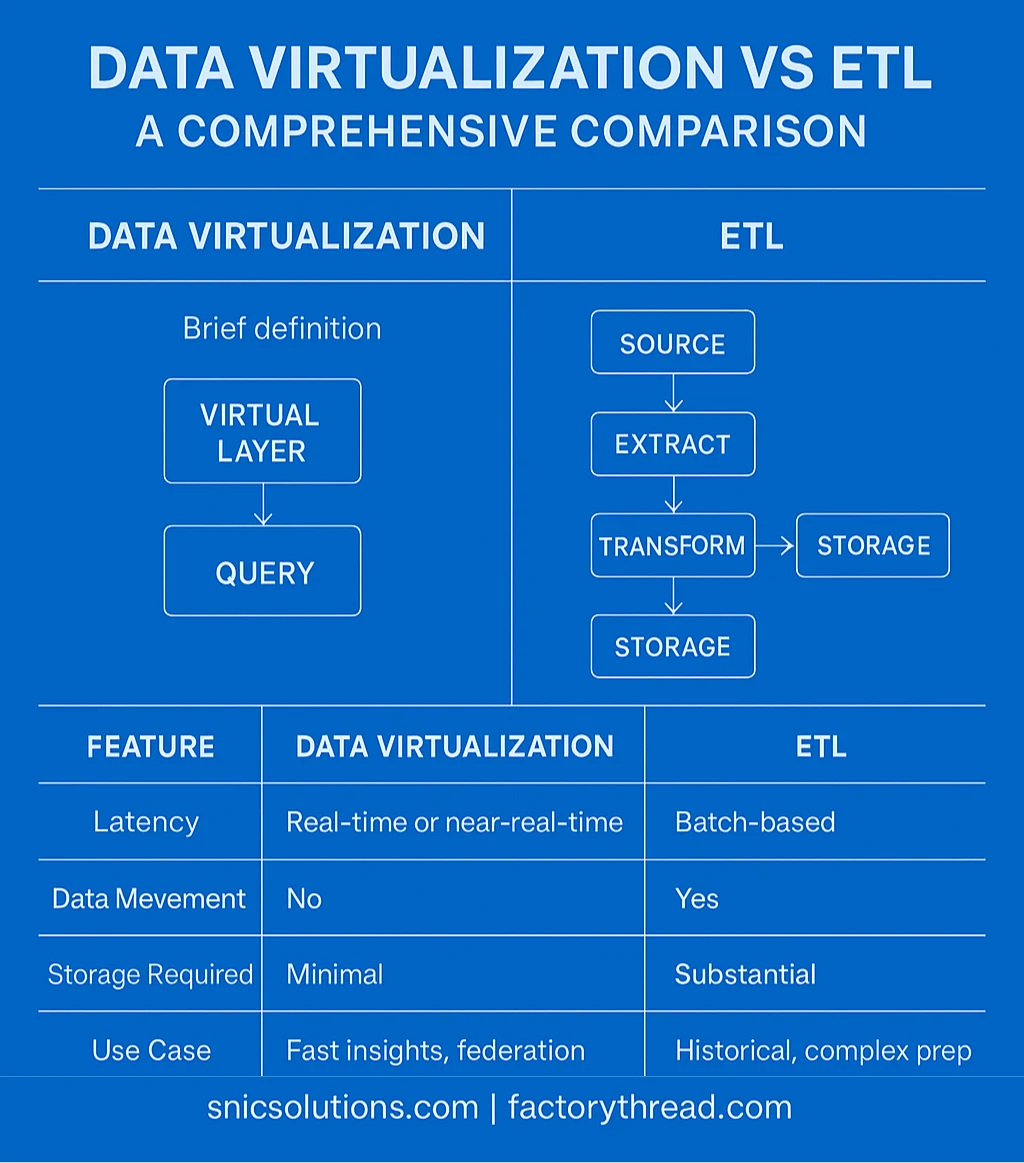

Data virtualization and ETL are two different methods for data integration, particularly in the context of an enterprise data warehouse. Both data virtualization and ETL are integral parts of an effective data management process, enabling efficient access and manipulation of data across various sources.

Data virtualization provides real-time access to multiple data sources without moving the data, while ETL extracts, transforms, and loads data into a data warehouse. This article compares these approaches to help you decide which fits your data management needs better.

Key Takeaways

-

Data virtualization allows real-time access to data from various sources without physical replication, enhancing agility and reducing storage costs.

-

The ETL process involves extracting, transforming, and loading data periodically, making it suitable for handling complex data transformations and historical analysis.

-

Combining data virtualization and ETL can optimize data management workflows, providing real-time insights alongside comprehensive historical context.

Understanding Data Virtualization

Data virtualization is a modern approach to data integration that enables unified access to data from various sources without the need for physical data movement. This method abstracts data from multiple data sources, providing a virtual data layer that can be queried and analyzed as if the data were in a single location, simplifying the underlying complexities through data abstraction.

Seamless data integration through data virtualization enhances relevant data quality, decision-making, and overall data management processes.

In comparison, data federation aggregates data from various sources into a virtual database, but it lacks the flexibility and real-time access offered by data virtualization, making it less suited for modern data management needs.

Definition of Data Virtualization

Data virtualization establishes a virtual data layer. This layer consolidates access to data from different sources without the need for physical movement. This approach allows users to query and interact with data as if it were all in one data warehouse, thereby eliminating the need for extensive data replication and storage. APIs, IPaaS, and ETL are often used to facilitate data integration, but data virtualization stands out by delivering real-time data access and integration.

The primary goal of data virtualization is to provide seamless data access while maintaining data integrity and security. This technology streamlines data management and improves accessibility by abstracting the underlying data, making it vital in today’s rapidly evolving data landscape. Data virtualization makes it simple to access data from various sources, distinguishing it from traditional data integration methods. Additionally, data virtualization allows users to query structured data in real-time from multiple sources, enhancing flexibility and speed over traditional ETL processes.

Benefits of Data Virtualization

One of the most significant benefits of data virtualization is its ability to provide real-time access to data, which is crucial for timely decision-making and operational efficiency. Unlike traditional data management solutions that require physical data movement, data virtualization retains data in its original systems, reducing integration costs and enhancing data security. This approach also supports better governance and compliance by keeping data in its original location without duplication.

Data virtualization technology provides a solution that is both agile and scalable. It eliminates data silos by providing a unified platform that integrates various data sources and enhances data accessibility across systems. It is particularly beneficial for contemporary data management needs. It creates a virtual unified data repository without the complexity of managing multiple platforms, thus facilitating quick integration of new data sources and improving overall data governance. This flexibility and scalability make data virtualization an ideal choice for organizations looking to stay ahead in a data-driven world. Additionally, data virtualization can unify data from multiple data warehouses, presenting a cohesive view without the need for duplicating physical data.

Data Virtualization Tools

A range of tools are available to support data virtualization, each offering distinct capabilities for improving data integration, real-time access, and cross-platform visibility.

FactoryThread stands out as a flexible data operations platform that empowers manufacturers to unify and access data from MES, LIMS, ERP, and IoT systems—without physical replication. Its virtualization layer enables teams to query live data across multiple sources, streamlining analytics and reducing time to insight.

The ETL Process Explained

The ETL process, which stands for Extract, Transform, Load, is a critical method used to move data from source to destination at periodic intervals. The process involves three key steps. First, data is extracted from source systems and then transformed to convert data from various formats into a suitable format before being loaded into a target system, like a data warehouse, for analysis. ETL tools have evolved to convert data from outdated legacy formats to contemporary formats suitable for modern databases.

ETL enhances data quality and business intelligence by improving the reliability, accuracy, and efficiency of data analysis. Additionally, ETL automates data cleansing and processing tasks, facilitating efficient data handling and streamlining data pipeline operations.

Extract

The extraction phase is the first step in the ETL process, where raw data is gathered from multiple sources. It is crucial to extract data from various sources to maintain data quality, handle scalability, and improve analytical processes. This step often involves swiftly copying data to tables to reduce querying time on the source system.

Once the data is extracted, it is typically stored in a staging area where it can be cleaned and transformed before being loaded into the target system. The frequency of data transmission from the data source to the target data store is influenced by a mechanism known as change data capture, highlighting the significance of the target data store in the overall flow of data management.

Transform

The transformation phase is where raw data is transformed and consolidated in staging tables for the target data warehouse. ETL tools replicate data during this transformation, ensuring that data from various sources is accurately integrated and prepared for analysis. This step involves data cleansing, which removes errors and formats source data for the target. For example, values like ‘Parent’ and ‘Child’ can be mapped to ‘P’ and ‘C’ respectively, ensuring consistency in the data.

Furthermore, the transformation process may include format revision, where data is converted into a consistent format, making it easier to work with during analysis. Columns or data attributes can also be divided into multiple columns to optimize analysis.

Derivation applies business rules to the data, allowing the calculation of new values during the ETL process. This phase ensures that the data is structured and ready for effective analysis. Relational databases play a crucial role in this transformation process by providing a structured environment where data can be efficiently stored and queried.

Load

The loading phase of the ETL process, known as data loading, is automated, ensuring that data is continuously processed and moved to the target data warehouse. During a full load, the entire dataset is transformed and moved to the target data warehouse to ensure completeness.

This step is crucial for enabling further analysis and reporting, as it ensures that the transformed data is effectively moved to the target data warehouse or database. Additionally, having a centralized repository for storing transformed data is essential for creating a cohesive data environment, which supports better decision-making and various analytics needs.

Data Integration Methods

Data integration is the process of combining data from multiple sources into a single, unified view. There are several data integration methods, including extract, transform, and load (ETL), extract, load, and transform (ELT), and data virtualization. ETL involves extracting data from various sources, transforming it into a suitable format, and loading it into a target database or data warehouse. ELT, on the other hand, loads the data into the target system first and then transforms it.

Data virtualization, as mentioned earlier, creates a virtual data layer that provides real-time access to data from multiple sources. By using data integration methods, organizations can ensure that their data is accurate, complete, and up-to-date. This unified view of data is essential for making informed business decisions and optimizing operational efficiency.

Read more: Data Integration vs Data Virtualization

Comparing Data Virtualization vs ETL

Data virtualization and ETL serve different purposes in data management, each with its unique strengths and applications. Data virtualization provides real-time data access without the need for physical data replication, making it ideal for scenarios requiring instantaneous insights.

In contrast, ETL involves physical data movement and is essential for consolidating large datasets and performing complex data transformations. Combining both approaches can streamline integration processes, reduce data movement, and improve access speed. Additionally, the choice between data virtualization and ETL can be influenced by factors such as data volume, complexity, and integration frequency.

Real-Time Data Access vs. Batch Processing

Data virtualization excels in providing real-time access to disparate data sources without the need for physical data movement. This capability is crucial for timely decision-making and operational efficiency in today’s data-driven landscape. In contrast, ETL operates on batch processing, aggregating data over specific time periods before processing, which can lead to delays in access.

Data Movement and Storage

ETL necessitates data replication, which can increase storage costs and management overhead. It is essential for merging data from older systems that may not be compatible with newer technologies.

Data virtualization, on the other hand, eliminates the need for physical data movement by providing a virtual view of the data, thus reducing storage costs and improving data management efficiency.

Use Cases and Scenarios

ETL is particularly beneficial in environments where complex data transformations are required and for integrating with legacy systems. Data virtualization shines in scenarios requiring real-time access to unstructured data from multiple sources without the need for physical duplication.

Combining data virtualization and ETL can provide organizations with effective real-time insights alongside comprehensive historical analysis.

Advantages of Data Virtualization Over ETL

Data virtualization offers several advantages over traditional ETL methods, including cost-effectiveness, flexibility, and real-time data access. Data virtualization projects enable the transformation of data from various disparate systems into a unified, real-time view. This modern approach to data management enables businesses to respond to market and regulatory changes swiftly. Data virtualization reduces integration costs and enhances data governance by avoiding physical data consolidation. Additionally, the importance of unstructured data in data virtualization cannot be overstated, as it allows for diverse analytics and improved decision-making.

Agility and Flexibility

Data virtualization allows for quick adjustments to a virtual data source, enhancing agility in data management. This capability supports rapid adaptation to evolving business requirements by enabling quick access to new data sources.

With data virtualization, businesses can prioritize quick insights and respond to changes more rapidly, making it a vital tool for modern data management.

Reduced Data Duplication

Data virtualization significantly minimizes data duplication by accessing data without creating physical copies. Creating a unified view of data without physical copies, data virtualization minimizes duplication and significantly reduces storage costs.

This approach makes data management faster and more flexible.

Enhanced Data Governance

Data virtualization improves data governance by facilitating centralized management of data access. It allows organizations to establish centralized control over data access and security policies, ensuring compliance with data governance regulations.

These measures enhance data governance and improve trust in data management practices.

When to Use ETL Over Data Virtualization

While data virtualization offers numerous benefits, there are scenarios where ETL is more appropriate. ETL is particularly suitable when complex data transformations from various source systems are required and when accurate historical data analysis, such as data mining, is essential.

This method is also preferred for scenarios where data needs to be transformed and stored for extensive analysis. Additionally, ETL is beneficial in data preparation by joining the same data from multiple sources to create a consolidated record.

Complex Data Transformations

ETL processes are optimal when data requires extensive multi-step transformations. ETL can handle intricate transformations that involve multiple data sources and formats, making it effective for deriving meaningful insights from raw data.

Complex transformations often require significant logic and processing before analysis, which ETL is well-suited to handle.

Historical Data Analysis

ETL provides deep historical context for an organization’s data, allowing for comprehensive analysis over time. Enterprises can utilize ETL to combine legacy data with data from new platforms and applications, enhancing their historical analysis capabilities.

This approach enables organizations to analyze long-term trends effectively.

Integration with Legacy Systems

ETL is often necessary for integrating with older systems because these systems may not support modern data access methods. Common challenges of integrating legacy systems include incompatible data formats, lack of real-time processing capabilities, and outdated technologies that do not support current standards.

Business Intelligence Applications

Business intelligence (BI) applications rely heavily on data integration and data virtualization. BI tools use data from various sources to provide insights and trends that can inform business decisions. Data virtualization is particularly useful in BI applications, as it provides real-time access to data from multiple sources. This enables business users to analyze data from different sources, such as customer relationship management (CRM) data, sales data, and marketing data, to gain a comprehensive understanding of their business. By using data virtualization and BI tools, organizations can make data-driven decisions and stay ahead of the competition. The ability to access and analyze data in real-time ensures that businesses can respond swiftly to market changes and customer needs.

Hybrid Approaches: Combining Data Virtualization and ETL

Combining data virtualization and ETL offers a comprehensive solution for modern data management. APIs facilitate data delivery by enabling real-time access and integration, allowing users to connect their applications and retrieve live data from different sources. Utilizing both approaches can streamline the integration of diverse data sources and enhance overall data management workflows.

Organizations can leverage the strengths of both data virtualization and ETL to achieve greater efficiency in data management. This hybrid approach is particularly beneficial when working with multiple data warehouses, as it ensures compatibility among different data sources and presents a unified view of data without duplicating physical data.

Optimizing Data Management Workflows

Combining data virtualization with ETL allows organizations to leverage the real-time data access of virtualization while also handling complex data transformations through ETL. This hybrid approach optimizes data management workflows by providing a unified access point for disparate data sources and ensuring compatibility with modern applications.

Real-Time Insights with Historical Context

Data virtualization enables real-time access and integration of data from various sources, allowing organizations to derive insights instantly. Combining this capability with the comprehensive historical context provided by ETL allows for a more robust analytical framework, where real-time data can be analyzed alongside historical trends to support informed decision-making and strategic planning.

Implementing Data Virtualization and ETL

Effective implementation of data virtualization and ETL can significantly enhance data accessibility and integration. Integrating these technologies allows organizations to streamline the data management process, lower infrastructure costs, and enhance overall efficiency and responsiveness.

Data federation also plays a crucial role in data integration by providing a unified view of data from various sources, although it lacks the flexibility and real-time access offered by data virtualization.

This hybrid approach offers the best of both worlds, combining the real-time capabilities of data virtualization with the robust data transformation capabilities of ETL.

Choosing the Right Tools

Selecting the right tools for data integration is crucial for the success of data virtualization and ETL projects. An effective data integration tool should support various data formats and offer flexibility in managing data sources.

Data quality tools, ETL tools, and reporting tools must be evaluated based on their ability to meet the specific needs of the organization and ensure seamless data integration and management to integrate data effectively.

Best Practices

Implementing best practices is essential for the successful deployment of data virtualization and ETL solutions. Comprehending and evaluating the source data is crucial to avoid issues later in the process. Implementing logging and setting checkpoints during ETL processes allows for effective tracking and recovery from failures.

Modularizing ETL components enhances reusability and simplifies maintenance, while an alert system for errors ensures prompt responses to issues. Optimizing solutions with parallel processing and data caching can significantly reduce processing time and improve performance.

Common Challenges and Solutions

Organizations often face challenges in ensuring data consistency across multiple sources during the implementation of data virtualization and ETL. Managing large databases can slow down ETL processes, while ensuring data security remains a critical concern. Addressing these challenges requires a comprehensive approach, including robust data governance policies, efficient data management processes, and the use of advanced tools and technologies to ensure data integrity and security.

Data Access and Security with FactoryThread

FactoryThread enhances data access and security by enabling real-time connectivity to manufacturing systems—without requiring data to be moved or duplicated. Its virtualization layer abstracts data from its physical sources, providing secure access controls that restrict visibility to authorized users only.

By centralizing how data is accessed across MES, LIMS, ERP, and IoT platforms, FactoryThread helps manufacturers maintain compliance with industry regulations while protecting sensitive operational and quality data. This architecture supports fast, secure decision-making on the shop floor—where timing and accuracy are critical.

With FactoryThread, teams gain trusted access to the right data, at the right time, without compromising security or governance.

Summary

In summary, both data virtualization and ETL play pivotal roles in modern data management. Data virtualization offers real-time access, flexibility, and reduced data duplication, making it ideal for agile environments. ETL, on the other hand, excels in complex data transformations, historical data analysis, and integration with legacy systems. Combining these approaches can optimize data management workflows, providing a logical data warehouse for organizations with the agility and comprehensive insights needed to stay competitive in today’s data-driven world. Embracing these technologies will enable organizations to harness the full potential of their data, driving innovation and informed decision-making.

Frequently Asked Questions

What is data virtualization?

Data virtualization is a contemporary method of data integration that allows unified access to data from various sources without the need for physical data movement. It establishes a virtual data layer that facilitates seamless querying and analysis.

How does ETL differ from data virtualization?

ETL focuses on the processes of extracting, transforming, and loading data into a target system, while data virtualization enables real-time access to data without the need for physical replication. This distinction allows organizations to choose the approach that best fits their data management needs.

What are the benefits of combining data virtualization and ETL?

The combination of data virtualization and ETL provides organizations with real-time data access alongside robust data transformation capabilities, thereby optimizing workflows and enhancing overall efficiency in data management. This integration equips businesses to respond swiftly to data-driven decisions.

When should ETL be used over data virtualization?

ETL should be used over data virtualization when complex data transformations are needed, accurate historical data analysis is essential, and integration with legacy systems is necessary. It is the preferred approach for scenarios requiring transformed data storage for in-depth analysis.

What are some common challenges in implementing data virtualization and ETL?

Implementing data virtualization and ETL often faces challenges such as ensuring data consistency across diverse sources, managing the performance of large databases, and maintaining data security. To effectively gather data and address these issues, it is essential to establish robust data governance policies and leverage advanced tools and technologies.

Share this

You May Also Like

These Related Stories

Data Mesh vs Data Fabric vs Data Lake

Data Virtualization vs Data Federation

No Comments Yet

Let us know what you think